✨ Lightbox Viewer 2.5.0-beta.3

In Edit Mode, hide objects that are hidden by the visibility icon in the scene hierarchy tab.

Fix: Object change events no longer trigger in Play Mode; they were causing errors in the logs.

The above changes have been contributed by mekanyanko (めかにゃんこ).

VRCConstraintBase.GetPerSourcePositionOffsets() is no longer public.

HVR Basis Face Tracking has been updated.

It should now work better with ARKit avatars: some face tracking traits were not being requested correctly, so VRCFaceTracking did not send them to us. No avatar reupload is needed.

This update includes some performance improvements, as well as groundwork for future use by HVR Basis AvatarOptimizer, so that avatar optimization tools can detect which blendshapes should not be removed from the mesh.

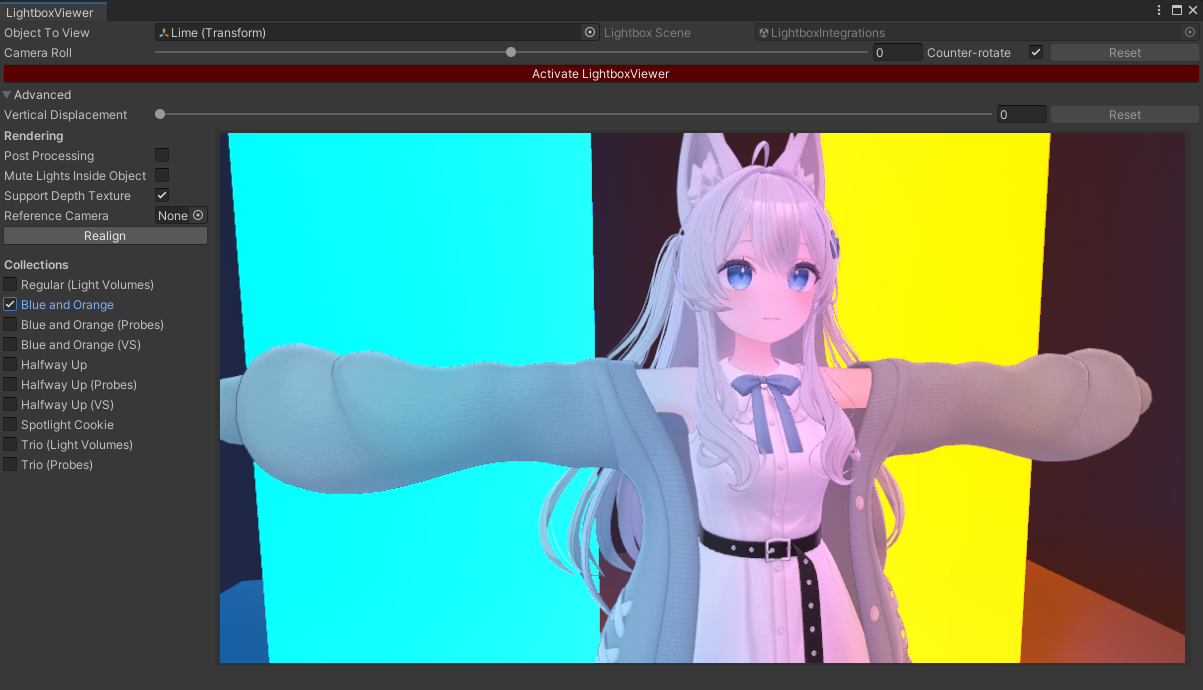

Lightbox Viewer has been updated to support URP projects.

Lightbox Viewer is now compatible with the following render pipeline configurations:

Features:

Changes:

Fixes:

HVR Basis Face Tracking has been migrated to the new Basis networking system.

Instructions for previous Face Tracking avatar users:

If you have previously used Face Tracking in Basis, do the following:

Remove all components that were part of the previous Face Tracking instructions. That includes:

To install the new Face Tracking, do the following:

The new face tracking has been modified to work with the Steam version of VRCFaceTracking (it now supports OSCQuery), but it should also work with older versions of VRCFaceTracking that were not installed from Steam.
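According to this entry, the OSCQuery service is advertised as `_oscjson._tcp` with an instance name of the form `VRChat-Client-XXXXXX`, where `XXXXXX` is a random number between 100000 and 999999. The Python sketch below only illustrates how such a name could be built. The helper names are hypothetical, and the `.local.` suffix follows the standard DNS-SD naming convention (`<Instance>.<Service>.<Domain>`) rather than anything stated in this changelog.

```python
import random
from typing import Optional

# DNS-SD service type for OSCQuery, as given in the changelog.
OSCQUERY_SERVICE_TYPE = "_oscjson._tcp"


def make_instance_name(rng: Optional[random.Random] = None) -> str:
    """Build an instance name of the form VRChat-Client-XXXXXX (hypothetical helper).

    randint is inclusive on both ends, so XXXXXX is always a six-digit
    number between 100000 and 999999.
    """
    rng = rng if rng is not None else random.Random()
    return f"VRChat-Client-{rng.randint(100000, 999999)}"


def full_service_name(instance: str, domain: str = "local.") -> str:
    """Join instance, service type and domain as DNS-SD does (hypothetical helper)."""
    return f"{instance}.{OSCQUERY_SERVICE_TYPE}.{domain}"


if __name__ == "__main__":
    # Format: VRChat-Client-NNNNNN._oscjson._tcp.local.
    print(full_service_name(make_instance_name()))
```

Drawing a fresh random suffix each time the service starts lowers the chance that two instances on the same network advertise the same name.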

/avatar, it replies with a pre-determined message (check out response-avtr.json).

response.json).

_oscjson._tcp with the instance name VRChat-Client-XXXXXX where XXXXXX is a random number between 100000 and 999999.

_oscjson._tcp once when the service starts.

Lightbox Viewer URP for the Basis Framework has been added as a new package.

This package is meant to be used in URP projects.

This early version does not support post-processing, and it does not support traditional Light Probes.

As I am no longer focusing on VRChat content creation, the following packages will now install in ALCOM without warnings, even if VRChat introduces breaking changes:

The upper bound of the VRChat Avatars package dependency has been raised to VRChat SDK999.

AacConfiguration.AssetContainerProvider to specify an asset container provider.

IAacAssetContainerProvider to abstract asset container management.

The above changes have been contributed by kb10uy (KOBAYASHI Yū) (first contribution).

The NDMF example in the Getting started page has been updated to demonstrate integration with this new API.

As specified in the changelog for the official release of Animator As Code V1, breaking changes had been planned; they are applied starting with this version:

These breaking changes are meant to be the last breaking changes for the lifetime of Animator As Code V1.

Add support for Light Volumes if it is installed in the project.

The lightboxes themselves do not change, so the differences are subtle. The Pink scene is the most notable because the left hand will be lit pink and the right hand will be lit purple.

If you want the previews to use Light Volumes, please note that, unlike for avatar uploads, it is not sufficient to have a compatible shader such as lilToon 1.10; you need the actual Light Volumes package installed in your avatar project.

This update is targeted specifically at Modular Avatar for Resonite users.

This update enables all Prefabulous Universal components to execute during builds targeting the Resonite app.

If you use Modular Avatar for Resonite, it is very likely you already have prerelease packages enabled in ALCOM, meaning you may update Prefabulous Universal to V2.2.0-alpha.0 using ALCOM.

If you use FaceTra Shape Creator, you need to download the patch linked here (no log-in required).

Support Modular Avatar for Resonite:

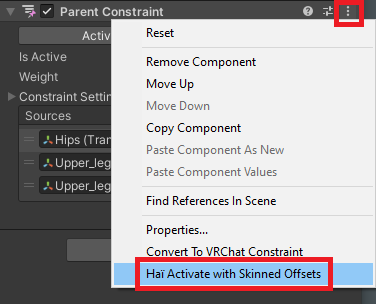

A new menu option named Activate with Skinned Offsets has been added to the inspector of the Parent Constraint and VRC Parent Constraint components.

Selecting this menu option behaves similarly to the Activate button on the Parent Constraint, but calculates different offsets: these offsets make the Parent Constraint behave more like weight painting (mesh skinning).

This is the same algorithm that is used by the Skinned Mesh Constraint Builder component. If you are already using that component, you do not need this menu option.
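For context, "mesh skinning" usually refers to linear blend skinning, in which each vertex follows a weighted blend of bone transforms. The equation below is that general textbook model, not the specific offset calculation performed by Activate with Skinned Offsets, which this changelog does not describe:

$$
\mathbf{v}' = \sum_{i=1}^{n} w_i \, \mathbf{M}_i \, \mathbf{B}_i^{-1} \, \mathbf{v},
\qquad \sum_{i=1}^{n} w_i = 1
$$

Here $\mathbf{v}$ is the vertex position in the rest pose, $\mathbf{B}_i$ is the rest-pose (bind) world transform of bone $i$, $\mathbf{M}_i$ is its current world transform, and $w_i$ is the painted weight. Intuitively, a parent constraint with several weighted sources resembles this sum, with each source playing the role of a bone.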

A new component, Skinned Mesh Constraint Builder, has been introduced in a new package.

This component creates a parent constraint that moves with the closest polygon of a Skinned Mesh Renderer.

Use this component when you notice that attaching an object to your body is not as straightforward as parenting to a bone.

You may install the new package Haï ~ Skinned Mesh Constraint using ALCOM.
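The changelog does not describe how the closest polygon is found. One common approach, sketched below in Python under that assumption, is to compute the closest point on each triangle to the object's position using the Voronoi-region test from Ericson's *Real-Time Collision Detection*, and keep the triangle with the smallest distance. The returned barycentric weights could then serve as blend weights for that triangle's three vertices. All names are hypothetical, and the component's actual implementation may differ.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Weights = Tuple[float, float, float]


def sub(p: Vec3, q: Vec3) -> Vec3:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def dot(p: Vec3, q: Vec3) -> float:
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]


def closest_on_triangle(p: Vec3, a: Vec3, b: Vec3, c: Vec3) -> Weights:
    """Barycentric weights (wa, wb, wc) of the point on triangle abc closest to p.

    Assumes a non-degenerate triangle.
    """
    ab, ac, ap = sub(b, a), sub(c, a), sub(p, a)
    d1, d2 = dot(ab, ap), dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return (1.0, 0.0, 0.0)  # vertex region A
    bp = sub(p, b)
    d3, d4 = dot(ab, bp), dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return (0.0, 1.0, 0.0)  # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        v = d1 / (d1 - d3)
        return (1.0 - v, v, 0.0)  # edge AB
    cp = sub(p, c)
    d5, d6 = dot(ab, cp), dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return (0.0, 0.0, 1.0)  # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        w = d2 / (d2 - d6)
        return (1.0 - w, 0.0, w)  # edge AC
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return (0.0, 1.0 - w, w)  # edge BC
    denom = 1.0 / (va + vb + vc)
    v, w = vb * denom, vc * denom
    return (1.0 - v - w, v, w)  # inside the face


def point_from_weights(wts: Weights, a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    x, y, z = (wts[0] * a[i] + wts[1] * b[i] + wts[2] * c[i] for i in range(3))
    return (x, y, z)


def find_closest_triangle(
    p: Vec3, vertices: Sequence[Vec3], triangles: Sequence[Tuple[int, int, int]]
) -> Tuple[int, Weights]:
    """Return (triangle index, barycentric weights) of the triangle closest to p."""
    best_index, best_weights, best_dist2 = -1, (0.0, 0.0, 0.0), math.inf
    for index, (ia, ib, ic) in enumerate(triangles):
        a, b, c = vertices[ia], vertices[ib], vertices[ic]
        weights = closest_on_triangle(p, a, b, c)
        offset = sub(p, point_from_weights(weights, a, b, c))
        dist2 = dot(offset, offset)
        if dist2 < best_dist2:
            best_index, best_weights, best_dist2 = index, weights, dist2
    return best_index, best_weights
```

On a skinned mesh, the vertices would be taken from the baked (posed) mesh, so the chosen triangle reflects the avatar's current shape.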

Starting from the 1st of February 2025, my repository listing will no longer officially support the VRChat Creator Companion package manager. Instead, it will support the ALCOM package manager.

In many cases you might still be able to install the packages from the VRChat Creator Companion.

However, if any issues occur within the VRChat Creator Companion during the installation process, they will be considered issues within the VRChat Creator Companion software itself, and workarounds will no longer be provided.

If you still use the VRChat Creator Companion, and an error occurs in VCC during the installation of some packages from this repository, then:

I recommend that you install the ALCOM package manager.

ALCOM (GitHub) is a community-created package manager. It is functionally equivalent to the VRChat Creator Companion, but contains fixes to many bugs that had been reported in the VRChat Creator Companion for years.

Report the bugs you have encountered in VCC directly to VRChat Inc., either through the feedback forum or through the GitHub issues.

The bug you have encountered has most likely already been reported in 2022 or 2023.

No action is needed if you already use ALCOM. Check out anatawa12's GitHub page.

A new knowledge sharing article has been published, "Running Modular Avatar on other apps".

The Starmesh Op. Transfer Blendshape component attempts to create a blendshape on a costume that mimics the movement of a blendshape from another mesh.

Try using this to transfer nail deformations, chest deformations, or hip deformations (e.g. Hip_big) from a base mesh to a costume.

Combine it with other Selectors to limit the areas affected by the transferred deformations.

This component may not always produce good results.

In addition, if you use other tools that attempt to fit a costume on an avatar it was not designed for (e.g. fitting a costume made for Manuka on a Lime base body), it will probably not work, as this operator expects the mesh data to overlap regardless of how bones are arranged in the scene.
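The changelog does not say how the operator maps vertices between meshes, only that it expects the mesh data to overlap. Assuming a simple nearest-vertex lookup, the idea can be sketched in Python as follows: each costume vertex copies the blendshape delta of the closest base-mesh vertex, scaled by an optional per-vertex mask that stands in for a Selector. All names are hypothetical, and a brute-force search is used for clarity rather than speed.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def nearest_index(p: Vec3, points: Sequence[Vec3]) -> int:
    """Index of the point in `points` closest to p (brute force, O(n))."""
    return min(range(len(points)), key=lambda i: math.dist(p, points[i]))


def transfer_blendshape(
    base_vertices: Sequence[Vec3],
    base_deltas: Sequence[Vec3],
    costume_vertices: Sequence[Vec3],
    mask: Optional[Sequence[float]] = None,
) -> List[Vec3]:
    """Return one blendshape delta per costume vertex (hypothetical helper).

    Each costume vertex takes the delta of its nearest base vertex, which only
    gives sensible results when both meshes overlap in space. `mask` holds a
    0..1 factor per costume vertex to limit the affected area.
    """
    result: List[Vec3] = []
    for i, v in enumerate(costume_vertices):
        dx, dy, dz = base_deltas[nearest_index(v, base_vertices)]
        m = 1.0 if mask is None else mask[i]
        result.append((dx * m, dy * m, dz * m))
    return result
```

This also shows why mismatched body bases fail: if the costume does not overlap the base mesh, the nearest base vertex belongs to the wrong body part and the copied delta is meaningless.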

The Animator As Code V1 API has been updated to improve support for third-party asset container management.

AacConfiguration.AssetContainerProvider to specify an asset container provider.

IAacAssetContainerProvider to abstract asset container management.

This change has been contributed by kb10uy (KOBAYASHI Yū) (first contribution).

The NDMF example in the Getting started page has been updated to demonstrate integration with this new API.
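Animator As Code is a C# library, and this changelog names only `AacConfiguration.AssetContainerProvider` and `IAacAssetContainerProvider` without listing their members. To keep the examples here in one language, the following is a Python analog of the provider pattern those names suggest: the configuration holds an object that decides where generated assets are stored, so a third-party tool (for example an NDMF plugin) can supply its own container. Every class and method name below is hypothetical and does not describe the real C# interface; refer to the Getting started page for actual usage.

```python
from typing import List, Optional, Protocol, Tuple


class AssetContainerProvider(Protocol):
    """Hypothetical analog of IAacAssetContainerProvider: decides where
    generated assets (animation clips, controllers, ...) are stored."""

    def save_asset(self, name: str, asset: object) -> None: ...


class InMemoryContainerProvider:
    """Hypothetical provider that keeps assets in a list, standing in for
    a container owned by another tool."""

    def __init__(self) -> None:
        self.assets: List[Tuple[str, object]] = []

    def save_asset(self, name: str, asset: object) -> None:
        self.assets.append((name, asset))


class Configuration:
    """Hypothetical analog of AacConfiguration with an optional provider."""

    def __init__(self, asset_container_provider: Optional[AssetContainerProvider] = None) -> None:
        self.asset_container_provider = asset_container_provider


def generate_clip(config: Configuration, name: str) -> dict:
    """Create a placeholder clip and hand it to the configured provider, if any."""
    clip = {"name": name, "curves": []}
    if config.asset_container_provider is not None:
        config.asset_container_provider.save_asset(name, clip)
    return clip
```

The benefit of the abstraction is that the code generating assets never needs to know whether they end up in a standalone asset file or in a container managed by another build tool.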

As specified in the changelog for the official release of Animator As Code V1, the breaking changes that were planned for V1.2.0 have been applied.

These breaking changes are meant to be the last breaking changes for the lifetime of Animator As Code V1.

For more details, see the full changelog.